I find RAVE one of the most inspiring technologies for (neural) audio synthesis and sound creation at the moment. What fascinates me in particular are the models’ (highly desirable) hallucinations, their interpretation and output especially on chaotic input.

For various months now, I’ve been experimenting – more or less systematically – with different sets of training data and hyperparameters as well as different ways to control the models’ behaviour and output in real time environments. Even though, a lot of time has been invested in this process, it still feels like I’ve merely scratched the surface of its creative potential.

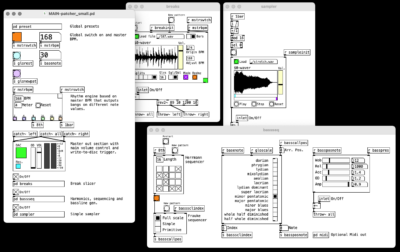

I’ve started documenting a few recent experiments via screen capture showing patches and setups in Pure Data in order to open the dialogue to other artists and researchers currently working with the technology.

In the video above, I’m showcasing an exemplary dynamic control and audio setup in Pure Data that allows playing with RAVE models in a mixture of style transfer and direct latent manipulation. The purpose of this setup was to both generate rhythmically repetitive information as well as allowing the model to hallucinate and dissolve this repetitiveness to an extent that produces new patterns.

The models used in this video have been trained on a selection of my tracks. I’m using the decoder of a model trained on Martsman tracks with certain more spacious characteristics and the encoder unit of a model trained on my Anthone body of work.

In the video above, I’m playing around with an MSPrior model I created based on a RAVE model I trained on a selection of Martsman tracks.

MSPrior is a multi(scale/stream) prior model architecture for realtime temporal learning also created by Antoine Caillon. The prior works as an unconditional autoregressive model. The value of a variable at a given time step is predicted based on its past values. The “unconditional” aspect implies that the model makes predictions solely based on historical data without incorporating any external factors, therefore it generates new output based on its earlier self-generated sample data.

More experiments with RAVE and neural audio synthesis can be found here.

Sources

- RAVE on GitHub

- MSPrior on GitHub

- To train MSPrior and RAVE on Colab or Kaggle, you can use these Jupyter notebooks I’ve created